Correlation does not imply causation, as there could be many explanations for the correlation. But does causation imply correlation? Intuitively, I would think that the presence of causation means there is necessarily some correlation. But my intuition has not always served me well in statistics. Does causation imply correlation?


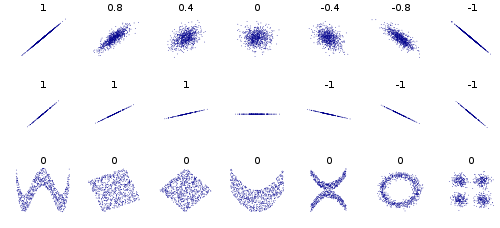

As many of the answers above have stated, causation does not imply linear correlation. Since a lot of the correlation concepts come from fields that rely heavily on linear statistics, usually correlation is seen as equal to linear correlation. The wikipedia article [1] is an alright source for this, I really like this image:

Look at some of the figures in the bottom row, for instance the parabola-ish shape in the 4th example. This is kind of what happens in @StasK answer (with a little bit of noise added). Y can be fully caused by X but if the numeric relationship is not linear and symmetric, you will still have a correlation of 0.

The word you are looking for is mutual information [2]: this is sort of the general non-linear version of correlation. In that case, your statement would be true: causation implies high mutual information.
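To make the contrast between correlation and mutual information concrete, here is a minimal sketch on a toy discrete example. The data (`xs` uniform on five integers, `ys` equal to their squares) and the helper names `pearson` and `mutual_information` are assumptions made up for illustration, not something from the linked articles.

```python
import math
from collections import Counter

# Hypothetical toy data: X uniform on {-2, ..., 2}, and Y = X**2,
# so Y is fully determined by X.
xs = [-2, -1, 0, 1, 2]
ys = [x * x for x in xs]

def pearson(a, b):
    """Sample Pearson correlation of two equal-length sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((u - ma) * (v - mb) for u, v in zip(a, b))
    var_a = sum((u - ma) ** 2 for u in a)
    var_b = sum((v - mb) ** 2 for v in b)
    return cov / math.sqrt(var_a * var_b)

def mutual_information(a, b):
    """Mutual information in bits, treating each observed pair as equally likely."""
    n = len(a)
    count_ab = Counter(zip(a, b))
    count_a, count_b = Counter(a), Counter(b)
    return sum(
        (c / n) * math.log2((c / n) / ((count_a[u] / n) * (count_b[v] / n)))
        for (u, v), c in count_ab.items()
    )

r = pearson(xs, ys)
mi = mutual_information(xs, ys)
print(f"correlation = {r:.3f}, mutual information = {mi:.3f} bits")
```

On this toy example the linear correlation vanishes while the mutual information is clearly positive, which is the sense in which mutual information picks up non-linear dependence.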

[1] http://en.wikipedia.org/wiki/Correlation_and_dependence

The strict answer is "no, causation does not necessarily imply correlation".

Consider $X\sim N(0,1)$ and $Y=X^2\sim\chi^2_1$. Causation does not get any stronger: $X$ determines $Y$. Yet the correlation between $X$ and $Y$ is 0. Proof: the (joint) moments of these variables are $E[X]=0$ and $E[Y]=E[X^2]=1$, so $${\rm Cov}[X,Y]=E[(X-0)(Y-1)] = E[XY]-E[X]\cdot 1 = E[X^3]-E[X]=0,$$ using the property of the standard normal distribution that its odd moments are all equal to zero (this can be derived easily from its moment-generating function, say). Hence the correlation is equal to zero.
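The zero-covariance argument can also be checked by simulation. The following is only a sketch: the seed, the sample size `n`, and the helper `pearson` are arbitrary choices of mine. The extra comparison against $|X|$ is my addition, included to show that the dependence is still there even though the linear correlation is not.

```python
import math
import random

random.seed(0)   # arbitrary seed, only for reproducibility
n = 100_000      # arbitrary sample size

def pearson(a, b):
    """Sample Pearson correlation of two equal-length sequences."""
    m = len(a)
    ma, mb = sum(a) / m, sum(b) / m
    cov = sum((u - ma) * (v - mb) for u, v in zip(a, b))
    var_a = sum((u - ma) ** 2 for u in a)
    var_b = sum((v - mb) ** 2 for v in b)
    return cov / math.sqrt(var_a * var_b)

x = [random.gauss(0.0, 1.0) for _ in range(n)]  # X ~ N(0, 1)
y = [v * v for v in x]                          # Y = X^2, fully determined by X

r_xy = pearson(x, y)                            # should be close to 0
r_abs = pearson([abs(v) for v in x], y)         # |X| versus Y: strongly correlated

print(f"corr(X, Y)   = {r_xy:.3f}")
print(f"corr(|X|, Y) = {r_abs:.3f}")
```

The sample correlation between $X$ and $Y$ will not be exactly zero, only within sampling noise of it.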

To address some of the comments: the only reason this argument works is that the distribution of $X$ is centered at zero and symmetric around 0. In fact, any other distribution with these properties and sufficiently many finite moments would have worked in place of $N(0,1)$, e.g., uniform on $(-10,10)$ or Laplace with density $\propto \exp(-|x|)$. An oversimplified argument is that for every positive value of $X$ there is an equally likely negative value of $X$ of the same magnitude, so when you square $X$, you can't say that greater values of $X$ are associated with greater or smaller values of $Y$. However, if you take, say, $X\sim N(3,1)$, then $E[X]=3$, $E[Y]=E[X^2]=10$, $E[X^3]=36$, and ${\rm Cov}[X,Y]=E[XY]-E[X]E[Y]=36-30=6\neq0$. This makes perfect sense: for each value of $X$ below zero, there is a far more likely value of $-X$ which is above zero, so larger values of $X$ are associated with larger values of $Y$. (The latter has a non-central $\chi^2$ distribution [1]; you can pull the variance from the Wikipedia page and compute the correlation if you are interested.)
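For anyone who wants the actual correlation in the $N(3,1)$ case without looking up the variance, here is a small sketch that computes it from raw moments. It assumes the standard recursion $E[X^k]=\mu E[X^{k-1}]+(k-1)\sigma^2E[X^{k-2}]$ for normal moments; the function names `normal_raw_moment` and `corr_x_x2` are made up for this illustration.

```python
import math

def normal_raw_moment(k, mu, sigma):
    """E[X^k] for X ~ N(mu, sigma^2), using
    E[X^k] = mu * E[X^(k-1)] + (k-1) * sigma^2 * E[X^(k-2)]."""
    m_prev, m_curr = 1.0, float(mu)  # E[X^0], E[X^1]
    if k == 0:
        return m_prev
    for j in range(2, k + 1):
        m_prev, m_curr = m_curr, mu * m_curr + (j - 1) * sigma**2 * m_prev
    return m_curr

def corr_x_x2(mu, sigma):
    """Covariance and correlation between X and Y = X^2 for X ~ N(mu, sigma^2)."""
    m1, m2, m3, m4 = (normal_raw_moment(k, mu, sigma) for k in (1, 2, 3, 4))
    cov = m3 - m1 * m2        # E[XY] - E[X]E[Y], with XY = X^3
    var_x = m2 - m1**2
    var_y = m4 - m2**2        # Var[X^2] = E[X^4] - E[X^2]^2
    return cov, cov / math.sqrt(var_x * var_y)

cov0, corr0 = corr_x_x2(0.0, 1.0)  # centered case: covariance 0
cov3, corr3 = corr_x_x2(3.0, 1.0)  # shifted case: covariance 6
print(cov0, corr0)
print(cov3, corr3)
```

With $\mu=3$ the fourth moment works out to 138, so ${\rm Var}[Y]=138-100=38$ and the correlation is $6/\sqrt{38}\approx 0.97$.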

[1] http://en.wikipedia.org/wiki/Noncentral-chi-squared-distribution

Things are definitely nuanced here. Causation does not imply correlation, nor even statistical dependence, at least not in the simple way we usually think about them, or in the way some answers are suggesting (just transforming $X$ or $Y$, etc.).

Consider the following causal model:

$$ X \rightarrow Y \leftarrow U $$

That is, both $X$ and $U$ cause $Y$.

Now let:

$$ X \sim \text{Bernoulli}(0.5)\\ U \sim \text{Bernoulli}(0.5)\\ Y = 1 - X - U + 2XU $$

Suppose you don't observe $U$. Notice that $P(Y|X) = P(Y)$: taking $X$ and $U$ to be independent, as the graph suggests, $Y=1$ exactly when $X=U$, so $P(Y=1|X=x)=P(U=x)=0.5$ for either value of $x$. That is, even though $X$ causes $Y$ (in the non-parametric structural equation sense), you don't see any dependence! You can apply any non-linear transformation you want and it won't reveal any dependence, because there is no marginal dependence between $Y$ and $X$ here.

The trick is that even though $X$ and $U$ cause $Y$, marginally their average causal effect is zero. You only see the (exact) dependence when conditioning on both $X$ and $U$ together (which also shows that $X\perp Y$ and $U\perp Y$ do not imply $\{X, U\} \perp Y$). So, yes, one could argue that, even though $X$ causes $Y$, the marginal causal effect of $X$ on $Y$ is zero, and that is why we don't see dependence between $X$ and $Y$. But this just illustrates how nuanced the problem is, because $X$ does cause $Y$, just not in the way you would naively think (it interacts with $U$).
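The model is small enough to enumerate exactly. This sketch assumes $X$ and $U$ are independent fair coins and uses exact fractions; the names `joint`, `prob`, `p_y1_given_x` and `p_y1_given_xu` are made up for illustration.

```python
from fractions import Fraction
from itertools import product

half = Fraction(1, 2)

# Joint distribution over (x, u, y), assuming X and U are independent fair coins.
joint = {}
for x, u in product((0, 1), repeat=2):
    y = 1 - x - u + 2 * x * u          # structural equation for Y
    joint[(x, u, y)] = half * half

def prob(pred):
    """Probability of the event described by pred(x, u, y)."""
    return sum(p for outcome, p in joint.items() if pred(*outcome))

# Marginal P(Y = 1).
p_y1 = prob(lambda x, u, y: y == 1)

# P(Y = 1 | X = x): conditioning on X alone.
p_y1_given_x = {
    xv: prob(lambda x, u, y: y == 1 and x == xv) / prob(lambda x, u, y: x == xv)
    for xv in (0, 1)
}

# P(Y = 1 | X = x, U = u): conditioning on both causes.
p_y1_given_xu = {
    (xv, uv): prob(lambda x, u, y: y == 1 and x == xv and u == uv)
    / prob(lambda x, u, y: x == xv and u == uv)
    for xv, uv in product((0, 1), repeat=2)
}

print("P(Y=1)          =", p_y1)
print("P(Y=1 | X)      =", p_y1_given_x)
print("P(Y=1 | X, U)   =", p_y1_given_xu)
```

Conditioning on $X$ alone leaves the distribution of $Y$ unchanged, while conditioning on both $X$ and $U$ pins $Y$ down completely.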

So in short I would say that: (i) causality suggests dependence; but (ii) the dependence is functional/structural dependence, and it may or may not translate into the specific statistical dependence you are thinking of.

Essentially, yes.

Correlation does not imply causation because there could be other explanations for a correlation beyond cause. But in order for A to be a cause of B, they must be associated in some way, meaning there is a correlation between them, though that correlation does not necessarily need to be linear.

As some of the commenters have suggested, it's likely more appropriate to use a term like 'dependence' or 'association' rather than correlation. Though as I've mentioned in the comments, I've seen "correlation does not mean causation" in response to analysis far beyond simple linear correlation, and so for the purposes of the saying, I've essentially extended "correlation" to any association between A and B.

Adding to @EpiGrad's answer: I think that, for a lot of people, "correlation" will imply "linear correlation", and the concept of nonlinear correlation might not be intuitive.

So, I would say "no they don't have to be correlated but they do have to be related". We are agreeing on the substance, but disagreeing on the best way to get the substance across.

One example of such a causation (at least people think it's causal) is that between the likelihood of answering your phone and income. It is known that people at both ends of the income spectrum are less likely to answer their phones than people in the middle. It is thought that the causal pattern is different for the poor (e.g. avoid bill collectors) and rich (e.g. avoid people asking for donations).

The cause and the effect will be correlated unless there is no variation at all in the incidence and magnitude of the cause and no variation at all in its causal force. The only other possibility would be if the cause is perfectly correlated with another causal variable with exactly the opposite effect. Basically, these are thought-experiment conditions. In the real world, causation will imply dependence in some form (although it might not be linear correlation).

There are great answers here. Artem Kaznatcheev [1], Fomite [2] and Peter Flom [3] point out that causation would usually imply dependence rather than linear correlation. Carlos Cinelli [4] gives an example where there's no dependence, because of how the generating function is set up.

I want to add a point about how this dependence can disappear in practice, in the kinds of datasets that you might well work with. Situations like Carlos's example are not limited to mere "thought-experiment conditions".

Dependences vanish in self-regulating processes. Homeostasis, for example, ensures that your internal body temperature remains independent of the room temperature. External heat influences your body temperature directly, but it also influences the body's cooling systems (e.g. sweating), which keep the body temperature stable. If we sample temperature at extremely short intervals and with extremely precise measurements, we have a chance of observing the causal dependences, but at normal sampling rates, body temperature and external temperature appear independent.

Self-regulating processes are common in biological systems; they are produced by evolution. Mammals that fail to regulate their body temperature are removed by natural selection. Researchers who work with biological data should be aware that causal dependences may vanish in their datasets.
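A deliberately idealized sketch of this cancellation is below. Every number in it (the `gain`, the 22-degree reference point, the noise level, the temperature range) is invented for illustration, and the regulation is assumed to cancel the direct effect exactly, which real homeostasis only approximates.

```python
import math
import random

random.seed(1)   # arbitrary seed, only for reproducibility
n = 5_000
gain = 0.3       # hypothetical direct effect of room temperature on body temperature

def pearson(a, b):
    """Sample Pearson correlation of two equal-length sequences."""
    m = len(a)
    ma, mb = sum(a) / m, sum(b) / m
    cov = sum((u - ma) * (v - mb) for u, v in zip(a, b))
    var_a = sum((u - ma) ** 2 for u in a)
    var_b = sum((v - mb) ** 2 for v in b)
    return cov / math.sqrt(var_a * var_b)

def body_temperature(room_temp, regulated):
    """Toy model: a direct heating path plus an optional compensating cooling path."""
    heating = gain * (room_temp - 22.0)        # direct causal path
    cooling = heating if regulated else 0.0    # idealized: regulation cancels it exactly
    return 37.0 + heating - cooling + random.gauss(0.0, 0.1)

room = [random.uniform(10.0, 35.0) for _ in range(n)]
body_regulated = [body_temperature(t, True) for t in room]
body_unregulated = [body_temperature(t, False) for t in room]

r_regulated = pearson(room, body_regulated)
r_unregulated = pearson(room, body_unregulated)
print(f"with regulation:    corr = {r_regulated:.3f}")
print(f"without regulation: corr = {r_unregulated:.3f}")
```

Room temperature is a cause of body temperature in both versions of the toy model; only the unregulated one shows it in the correlation.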

[1] https://stats.stackexchange.com/a/26370/57345

The answer is: Causation does not imply (linear) correlation.

Assume we have the causal graph $X \rightarrow Y$, where $X$ is a cause of $Y$, such that $Y=X$ if $X < 0$ and $Y=-X$ otherwise (if $X \geq 0$); that is, $Y=-|X|$.

Clearly, $X$ is a cause of $Y$. However, when you compute the correlation between instances of $X$ and $Y$, e.g., for the points $X$=[-5,-4,-3,-2,-1,0,1,2,3,4,5] and $Y$=[-5,-4,-3,-2,-1,0,-1,-2,-3,-4,-5], the correlation $corr(X,Y)$ will be 0, even though there is a true causal mechanism between $X$ and $Y$ in which the value of $Y$ is solely determined by the value of $X$.

You can try it out here: https://ncalculators.com/statistics/covariance-calculator.htm using the above data vectors.
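The same check can be run locally. This is a minimal sketch using the two data vectors from the example; the helper `pearson` is a hand-written sample correlation, not a library call.

```python
import math

# Data vectors from the example: Y = X for X < 0 and Y = -X otherwise.
X = [-5, -4, -3, -2, -1, 0, 1, 2, 3, 4, 5]
Y = [x if x < 0 else -x for x in X]

def pearson(a, b):
    """Sample Pearson correlation of two equal-length sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((u - ma) * (v - mb) for u, v in zip(a, b))
    var_a = sum((u - ma) ** 2 for u in a)
    var_b = sum((v - mb) ** 2 for v in b)
    return cov / math.sqrt(var_a * var_b)

r = pearson(X, Y)
print(Y)
print(f"corr(X, Y) = {r:.6f}")
```

Up to floating-point rounding, the correlation comes out as zero, because each positive $X$ contributes exactly the opposite of its mirrored negative value.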

I add a less statistically technical answer here for the less statistically inclined audience:

One variable (let's say, $X$) can positively influence another variable (let's say, $Y$), while not being associated with $Y$, or even being negatively associated with $Y$, if there are confounding factors that distort the association between $X$ and $Y$.

For example, suppose that the very best doctors are put in wards with the highest-needs patients. While the quality of doctors itself helps to reduce the death rates of patients, the quality of doctors could actually be positively correlated with death rates (better doctors appearing to go with more deaths), because of the confounding variable of the needs of the patients.
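For readers who do want to see numbers, here is a rough simulation of this kind of confounding. Everything in it is an invented assumption: patient `need`, doctor `quality`, and a continuous `risk` score standing in for death rates, with arbitrary coefficients chosen so that quality lowers risk while being assigned mostly to high-need wards.

```python
import math
import random

random.seed(2)   # arbitrary seed, only for reproducibility
n = 5_000

def pearson(a, b):
    """Sample Pearson correlation of two equal-length sequences."""
    m = len(a)
    ma, mb = sum(a) / m, sum(b) / m
    cov = sum((u - ma) * (v - mb) for u, v in zip(a, b))
    var_a = sum((u - ma) ** 2 for u in a)
    var_b = sum((v - mb) ** 2 for v in b)
    return cov / math.sqrt(var_a * var_b)

def residuals(y, x):
    """Residuals from a simple least-squares regression of y on x."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    slope = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sum(
        (a - mx) ** 2 for a in x
    )
    return [(b - my) - slope * (a - mx) for a, b in zip(x, y)]

# Hypothetical data-generating process (all coefficients are made up):
need = [random.gauss(0.0, 1.0) for _ in range(n)]              # patient need (confounder)
quality = [nd + 0.5 * random.gauss(0.0, 1.0) for nd in need]   # best doctors go to high need
risk = [
    0.5 * nd - 0.2 * q + 0.1 * random.gauss(0.0, 1.0)          # quality LOWERS risk
    for nd, q in zip(need, quality)
]

r_marginal = pearson(quality, risk)
# Partial correlation: correlate what is left of each variable after removing need.
r_partial = pearson(residuals(quality, need), residuals(risk, need))

print(f"quality vs risk, ignoring need:      {r_marginal:.3f}")
print(f"quality vs risk, adjusting for need: {r_partial:.3f}")
```

Ignoring need, better doctors appear to go with higher risk; once need is accounted for, the sign flips to match the causal effect built into the simulation.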